
The field of natural language processing (NLP) has advanced rapidly since the introduction of transformer-based models. FLAN-T5 is one notable contribution. Released by Google and documented on the Hugging Face platform, it is an instruction-finetuned version of the original T5 (Text-to-Text Transfer Transformer) that can handle a wider range of tasks out of the box.

What is FLAN-T5?

FLAN-T5, introduced in the paper “Scaling Instruction-Finetuned Language Models,” is T5 finetuned on a large mixture of tasks phrased as natural-language instructions. This training lets it follow an instruction given at inference time, such as "translate this sentence" or "answer this question," and generate a suitable response. Unlike the T5 v1.1 checkpoints, which were pre-trained on C4 only and must be finetuned before use on a downstream task, FLAN-T5 weights can be used directly without additional finetuning, which makes the model easy to apply to many NLP tasks.
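Because the same weights can be pointed at a different task just by changing the instruction, one checkpoint can answer a small batch of unrelated prompts. The sketch below assumes the `transformers` and `torch` packages are installed; the prompts and the helper name `run_instructions` are illustrative, not part of the model's documentation.

```python
# Sketch: send several unrelated instructions to one FLAN-T5 checkpoint in a single batch.
# Assumes `transformers` and `torch` are installed; prompts and helper name are illustrative.

INSTRUCTIONS = [
    "Translate English to German: How old are you?",
    "Answer the following question. What is the capital of France?",
    "Is the following sentence positive or negative? I loved this movie.",
]


def run_instructions(prompts, checkpoint="google/flan-t5-small", max_new_tokens=32):
    """Return one decoded answer per prompt, in the same order as `prompts`."""
    # Imported here so the prompt list can be inspected without loading a model.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)
    # Pad the batch so prompts of different lengths can be encoded together.
    batch = tokenizer(prompts, return_tensors="pt", padding=True)
    outputs = model.generate(**batch, max_new_tokens=max_new_tokens)
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)
```

Calling `run_instructions(INSTRUCTIONS)` downloads the checkpoint on first use and returns a list of strings, one per prompt. No task-specific head or finetuning step is involved; only the wording of each prompt changes.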

Key Features of FLAN-T5

FLAN-T5 includes the same improvements as T5 version 1.1, such as a gated-GELU (GEGLU) activation in the feed-forward layers instead of ReLU and no parameter sharing between the embedding and classifier layers. Google has made several FLAN-T5 checkpoints available, including:

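- google/flan-t5-small (about 80M parameters)

- google/flan-t5-base (about 250M parameters)

- google/flan-t5-large (about 780M parameters)

- google/flan-t5-xl (about 3B parameters)

- google/flan-t5-xxl (about 11B parameters)

These variants cover a wide range of sizes, from small checkpoints that run comfortably on a CPU to the XXL model, whose weights alone take tens of gigabytes of memory.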
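A rough way to choose among these checkpoints is to estimate how much memory the weights alone need: parameter count times bytes per parameter. The sketch below uses approximate, rounded parameter counts for each checkpoint and ignores activations and framework overhead, so treat its result as a lower bound. The helper names `weight_memory_gb` and `largest_that_fits` are hypothetical.

```python
# Back-of-the-envelope weight-memory estimate for the FLAN-T5 checkpoints.
# Parameter counts are rounded approximations; the helpers are hypothetical.

APPROX_PARAMS = {
    "google/flan-t5-small": 80e6,
    "google/flan-t5-base": 250e6,
    "google/flan-t5-large": 780e6,
    "google/flan-t5-xl": 3e9,
    "google/flan-t5-xxl": 11e9,
}

# Storage size of a single weight for common data types.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2}


def weight_memory_gb(checkpoint, dtype="float32"):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return APPROX_PARAMS[checkpoint] * BYTES_PER_PARAM[dtype] / 1e9


def largest_that_fits(budget_gb, dtype="float32"):
    """Return the biggest checkpoint whose weights fit in budget_gb, or None."""
    fitting = [name for name in APPROX_PARAMS if weight_memory_gb(name, dtype) <= budget_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda name: APPROX_PARAMS[name])


for name in APPROX_PARAMS:
    print(f"{name}: ~{weight_memory_gb(name):.2f} GB in float32")
```

With an 8 GB budget, `largest_that_fits(8)` returns `"google/flan-t5-large"` in float32 (about 3.1 GB of weights), while `largest_that_fits(8, "bfloat16")` returns `"google/flan-t5-xl"` (about 6 GB), because halving the bytes per weight halves the estimate.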

How to Use FLAN-T5

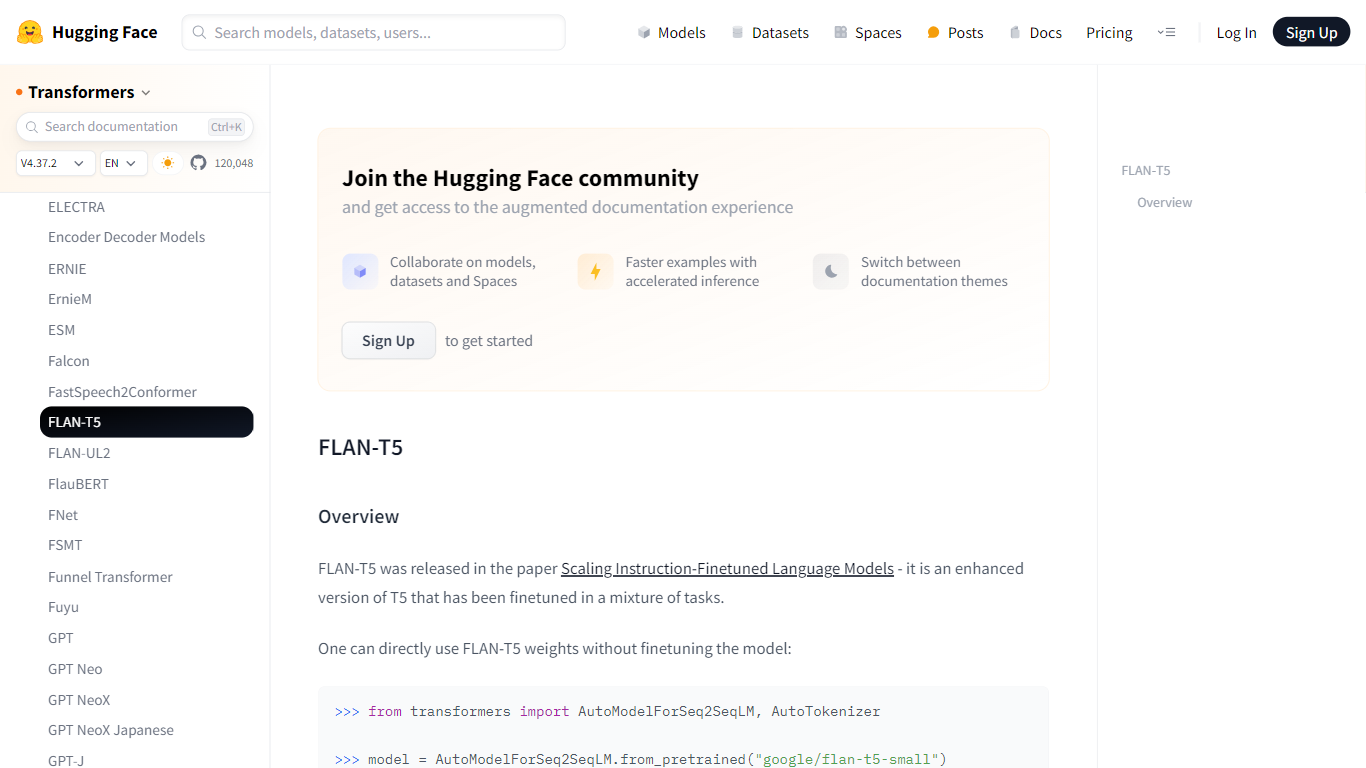

Using FLAN-T5 is straightforward, thanks to the Hugging Face transformers library. The following code snippet demonstrates how to utilize the model for generating text:

```python
from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

# Load the smallest FLAN-T5 checkpoint and its matching tokenizer.
model = AutoModelForSeq2SeqLM.from_pretrained("google/flan-t5-small")
tokenizer = AutoTokenizer.from_pretrained("google/flan-t5-small")

# Encode the instruction as PyTorch tensors.
inputs = tokenizer("A step by step recipe to make bolognese pasta:", return_tensors="pt")

# Generate output token IDs and decode them back to text.
outputs = model.generate(**inputs)
print(tokenizer.batch_decode(outputs, skip_special_tokens=True))
```

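In the example above, the model responds to the prompt with bolognese instructions. With flan-t5-small the reply is short, closer to a single cooking step than a full recipe, partly because `generate()` stops at a default maximum length (20 tokens unless the model's generation config sets otherwise). Passing a limit such as `max_new_tokens` allows longer outputs.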
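If the default output is too short, decoding can be controlled with keyword arguments to `generate()`. The sketch below collects a few standard `generate()` options in one place; the specific values, and the helper names `generation_kwargs` and `generate_text`, are illustrative assumptions rather than recommended settings. It assumes `transformers` and `torch` are installed.

```python
# Sketch: longer, beam-searched generation with FLAN-T5.
# Assumes `transformers` and `torch` are installed; values are illustrative, not tuned.

DEFAULT_GENERATION_KWARGS = {
    "max_new_tokens": 128,      # allow up to 128 generated tokens
    "num_beams": 4,             # beam search instead of greedy decoding
    "early_stopping": True,     # stop once num_beams complete candidates exist
    "no_repeat_ngram_size": 3,  # forbid repeating any 3-gram in the output
}


def generation_kwargs(**overrides):
    """Return a copy of the defaults with any overrides applied."""
    return {**DEFAULT_GENERATION_KWARGS, **overrides}


def generate_text(prompt, checkpoint="google/flan-t5-small", **overrides):
    """Generate a single answer for `prompt` using the merged decoding settings."""
    # Imported lazily so the settings above can be inspected without loading a model.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForSeq2SeqLM.from_pretrained(checkpoint)
    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, **generation_kwargs(**overrides))
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)[0]
```

For example, `generate_text("A step by step recipe to make bolognese pasta:")` returns one string, and `generate_text(prompt, num_beams=1)` switches back to greedy decoding. Beam search costs roughly `num_beams` times more decoder compute than greedy decoding.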

Advantages of FLAN-T5

One of the main advantages of FLAN-T5 is its instruction finetuning. After pre-training, the model was finetuned on a large collection of tasks (about 1.8K in the paper), each phrased as a natural-language instruction. This helps it interpret what a request is asking for and follow instructions it has not seen before. The range of model sizes also lets practitioners trade output quality against computational cost to suit their application and budget.
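Since the model was finetuned on instructions, it usually helps to state the task explicitly and, for classification-style questions, list the allowed answers. The helper below is a hypothetical template for building such prompts; it is not the exact format of the Flan training templates, only one reasonable way to phrase an instruction.

```python
# Hypothetical prompt builder for instruction-style queries to FLAN-T5.


def build_instruction(instruction, text, options=None):
    """Combine an instruction, the input text, and optional answer choices."""
    parts = [instruction.strip(), text.strip()]
    if options:
        # List the permitted answers so the model can pick one of them.
        parts.append("Options:\n" + "\n".join(f"- {option}" for option in options))
    return "\n\n".join(parts)


prompt = build_instruction(
    "Is the sentiment of this review positive or negative?",
    "The pasta was cold and the service was slow.",
    options=["positive", "negative"],
)
print(prompt)
```

The resulting string can be passed to the tokenizer and `model.generate()` in the same way as the bolognese prompt in the earlier example.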

For developers and researchers who want to explore FLAN-T5 in depth, the original checkpoints are available from Google, and the Hugging Face T5 documentation page provides the API reference, code examples, and notebooks that also apply to FLAN-T5. The model card gives further details on how FLAN-T5 was trained and evaluated.

Conclusion

FLAN-T5 reflects the ongoing evolution of language models. Its ease of use and strong zero-shot performance make it a good choice for NLP practitioners who need a model that follows instructions without task-specific finetuning. The Hugging Face community continues to support and contribute to models like FLAN-T5, making advanced NLP more accessible to the wider AI community.

The post FLAN-T5 appeared first on AI Parabellum.
